Use trained models with Auto-Annotate

Equip V7 models and your own models in Auto-Annotate

The Model Tool

The Model Tool lets you combine the Auto-Annotate tool with models trained on your data. Choose from trained instance segmentation, object detection, or classification models, and label specific regions or entire files according to confidence thresholds that you set.

The Model Tool will output polygons, bounding boxes, text, and tags with a corresponding confidence score for each annotation.

To use the Model Tool:

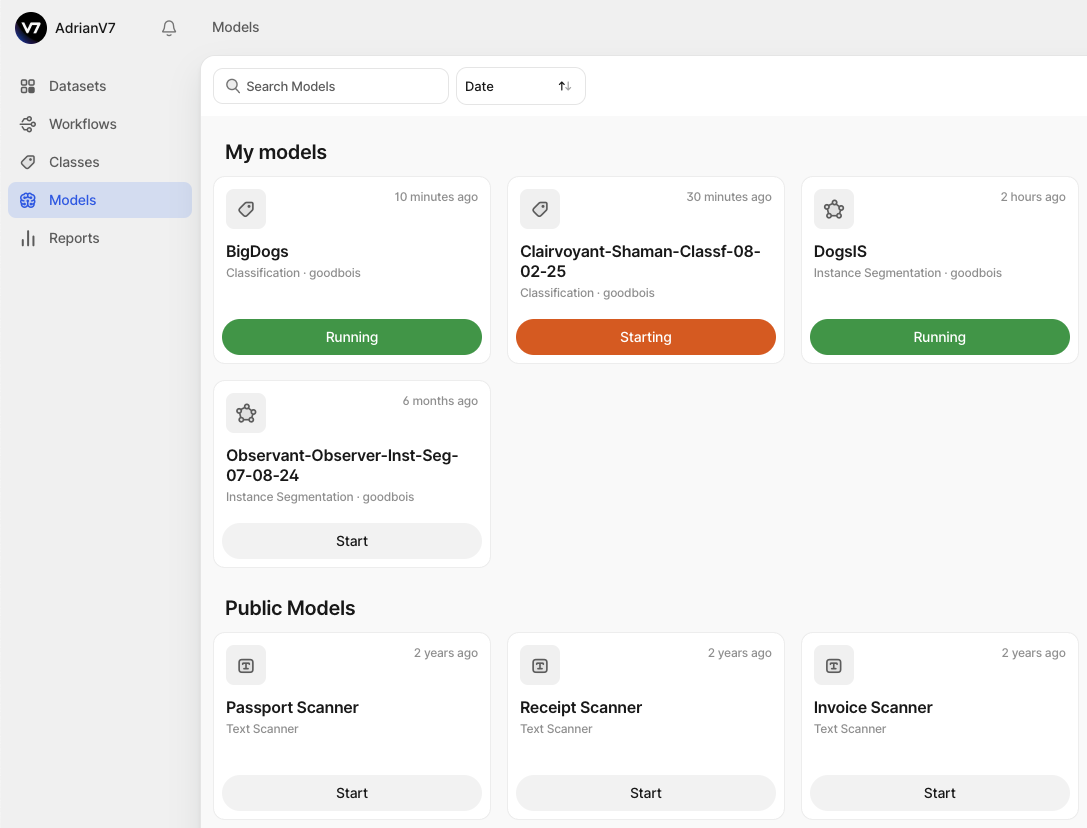

1. Start your models

Head to the Neural Networks page and start any of your trained models.

2. Equip Auto-Annotate

Return to the workview and equip Auto-Annotate. Any running models will appear in the model dropdown.

3. Map classes

Click Map classes and match the classes that the model was trained on to their corresponding classes in the dataset.

4. Set a confidence threshold

Set the percentage confidence score above which you want your model to create labels.

If the threshold is set to 70%, for example, the model will not generate any labels in cases where it is less than 70% sure that it has correctly identified the object of interest.
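
Steps 3 and 4 amount to a rename followed by a filter. The sketch below is illustrative plain Python, not V7's API: the `Prediction` type, the `apply_mapping_and_threshold` function, and the class names are all hypothetical. It assumes that predictions whose model class has no mapping are skipped and that a score exactly equal to the threshold is kept; the steps above do not specify either case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    """One model output; a hypothetical structure used only for illustration."""
    model_class: str
    confidence: float  # in the range 0.0 to 1.0


def apply_mapping_and_threshold(predictions, class_map, threshold):
    """Rename model classes to dataset classes, then drop low-confidence results.

    Assumptions: predictions with an unmapped class are skipped, and a score
    equal to the threshold is kept.
    """
    labels = []
    for pred in predictions:
        dataset_class = class_map.get(pred.model_class)
        if dataset_class is None:
            continue  # the model class was not mapped to a dataset class
        if pred.confidence < threshold:
            continue  # the model is less sure than the threshold requires
        labels.append((dataset_class, pred.confidence))
    return labels


# Hypothetical class names and scores.
class_map = {"car": "Vehicle", "person": "Pedestrian"}
predictions = [
    Prediction("car", 0.92),
    Prediction("person", 0.65),
    Prediction("dog", 0.99),
]
labels = apply_mapping_and_threshold(predictions, class_map, threshold=0.70)
print(labels)  # [('Vehicle', 0.92)]
```

With a 70% threshold, only the 92% "car" prediction becomes a label: the "person" prediction falls below the threshold, and "dog" has no mapped dataset class.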

Annotate!

The Model Tool gives you three ways to annotate. You can draw a bounding box over a single object (as with traditional Auto-Annotate) to create a single label.

Draw a bounding box around a region of interest containing multiple objects to create multiple labels.

Or hit Cmd + Enter to label an entire file at once.

Undo

Hitting Cmd + Z will undo all annotations made with a single action. Creating and undoing multiple annotations at once with the Model Tool counts as a single annotation action.

Model Types

The examples above focus on instance segmentation, but the Model Tool works with all model types that can be trained in V7.

Using a trained object detection model, the Model Tool will output bounding boxes.

Using a trained classification model, the Model Tool will classify files with tags at the file level, either after annotating a single region or the entire file:

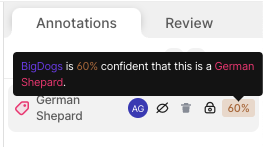

Confidence scores

Confidence scores are displayed at the label level whenever a trained model is used to create a label with Auto-Annotate.

You can also use a confidence threshold to prevent files with low confidence scores from being pushed through to a model stage when sending files to the next stage one-by-one.

To do this, create a workflow with a Being Annotated stage, followed by a Model stage.

In the first stage, equip Auto-Annotate and set your desired confidence threshold before sending files to the Model stage. Any files that do not meet this threshold will be blocked from moving to the next stage.
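
The gating behaviour can be pictured as a check that runs before a file leaves the first stage. The sketch below is an illustration under stated assumptions, not V7's implementation: `file_meets_threshold` is a hypothetical name, the file names and scores are made up, and the rule that every label on a file must reach the threshold (with an unlabelled file not passing) is only one possible reading of what it means for a file to meet the threshold.

```python
def file_meets_threshold(label_scores, threshold):
    """Return True when every label score reaches the threshold.

    Assumption: a file with no labels does not pass.
    """
    return bool(label_scores) and min(label_scores) >= threshold


# Hypothetical files and their label confidence scores.
files = {
    "scan_001.png": [0.91, 0.84],
    "scan_002.png": [0.95, 0.58],
    "scan_003.png": [],
}
threshold = 0.70

passed = [name for name, scores in files.items()
          if file_meets_threshold(scores, threshold)]
blocked = [name for name in files if name not in passed]

print(passed)   # ['scan_001.png']
print(blocked)  # ['scan_002.png', 'scan_003.png']
```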
