Suggested Workflows

V7's workflows let you build as many custom training data pipelines as you need. Below are some of our recommended workflows, grouped by use case, to give you some inspiration.

External Annotation

If you're working with an external workforce such as V7's Labelling service, set up a secondary review stage in which your internal team reviews all, or a percentage, of the files that the external team has already annotated and reviewed.

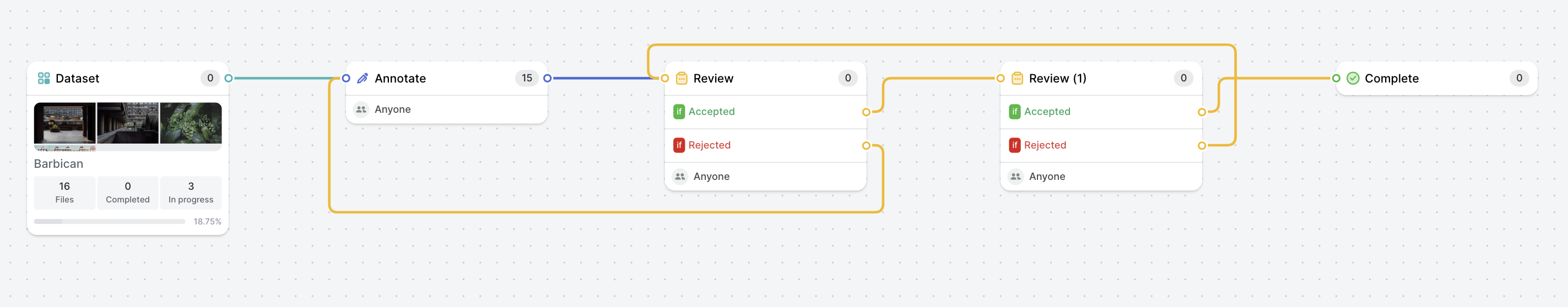

Example 1 - Standard external annotation flow

- External workers add annotations

- External reviewers review annotations against instructions

- Internal reviewers perform a final review

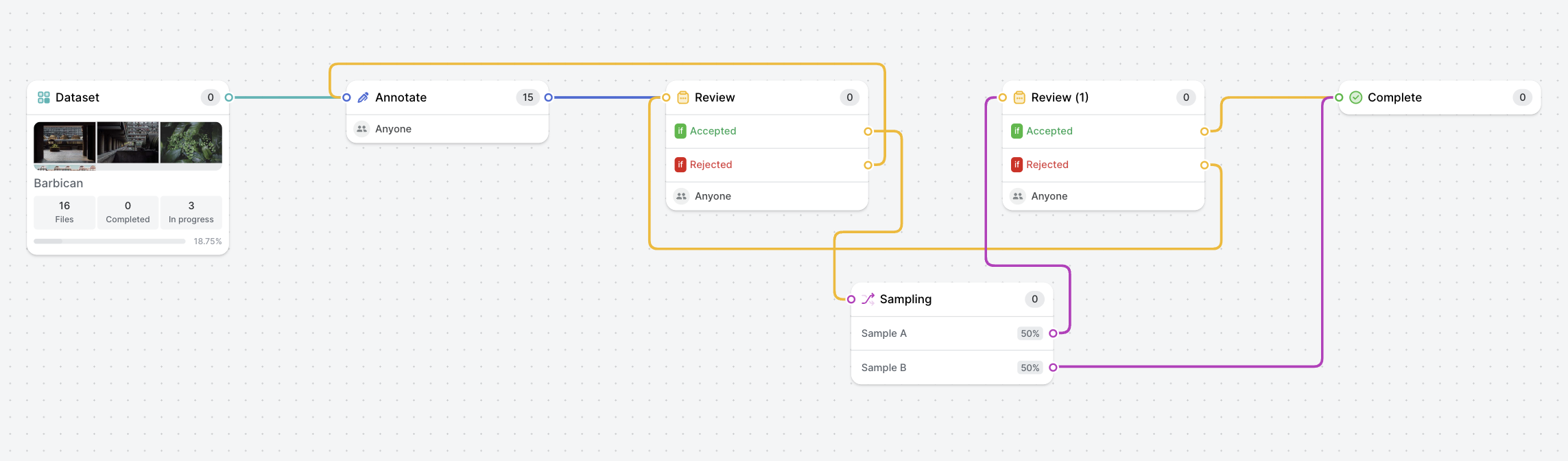

Example 2 - Sampled external annotation flow

- External workers add annotations

- External reviewers review annotations against instructions

- A 50% sample of files is sent for internal review

- Internal reviewers perform a final review
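The sampling step can be pictured with a small Python sketch. It assumes each file is sampled independently with probability `sample_rate`, so roughly (not exactly) half of the files reach internal review. The function name `split_for_internal_review` is hypothetical, and this is not a description of how V7 implements sampling internally.

```python
import random
from typing import Iterable, List, Optional, Tuple


def split_for_internal_review(
    file_ids: Iterable[str], sample_rate: float = 0.5, seed: Optional[int] = None
) -> Tuple[List[str], List[str]]:
    """Split files into (sent to internal review, skipped internal review)."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    rng = random.Random(seed)  # seeded generator so a split can be reproduced
    sampled: List[str] = []
    skipped: List[str] = []
    for file_id in file_ids:
        # Each file is sampled independently, so the split is only ~50% on average.
        if rng.random() < sample_rate:
            sampled.append(file_id)
        else:
            skipped.append(file_id)
    return sampled, skipped


# Usage: 10 files, 50% sample rate, fixed seed for reproducibility.
to_review, skipped = split_for_internal_review([f"file_{i}.jpg" for i in range(10)], 0.5, seed=42)
print(len(to_review), "files sent for internal review")
```

Passing a fixed `seed` makes the split reproducible, which can help when auditing which files skipped internal review.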

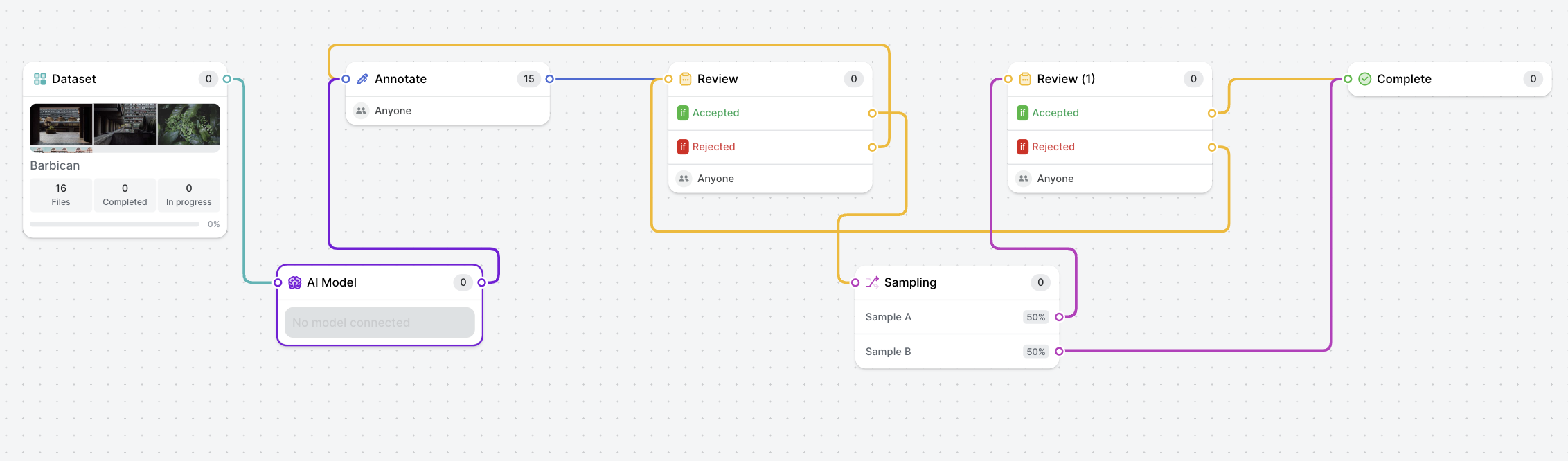

Example 3 - Model-assisted sampled external annotation flow

- Initial annotations are made using a model

- External workers review model-created annotations, add missing instances, and correct model output where needed

- External reviewers review annotations against instructions

- A 50% sample of files is sent for internal review

- Internal reviewers perform a final review

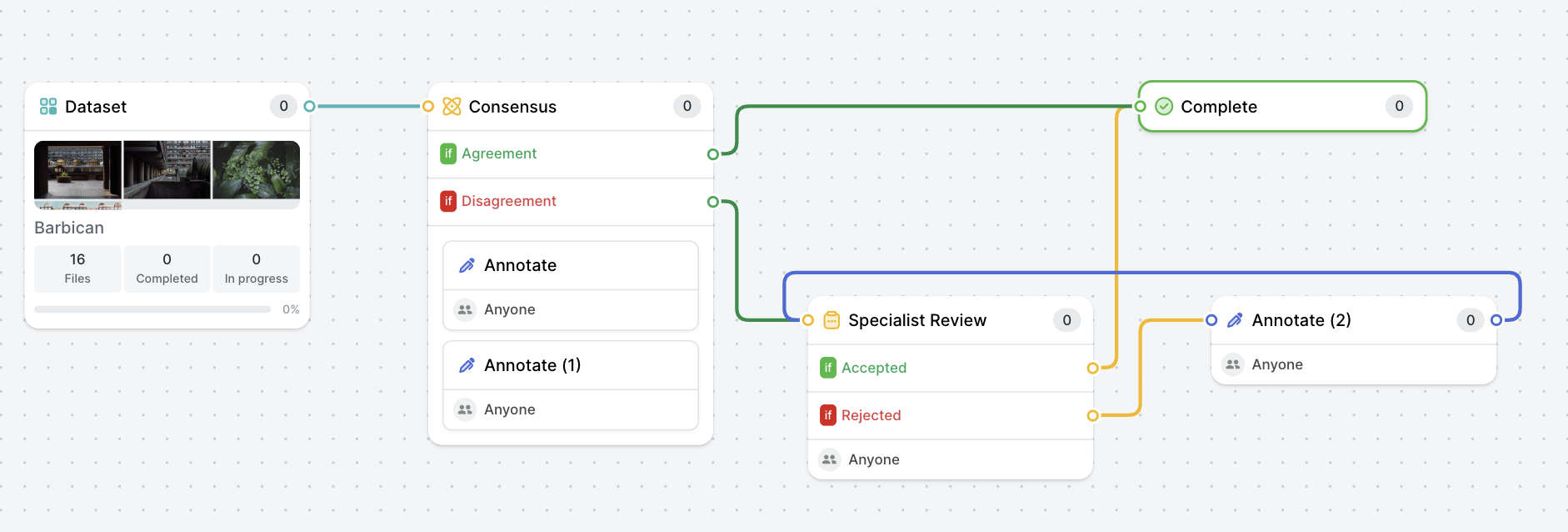

Specialist Reviewers

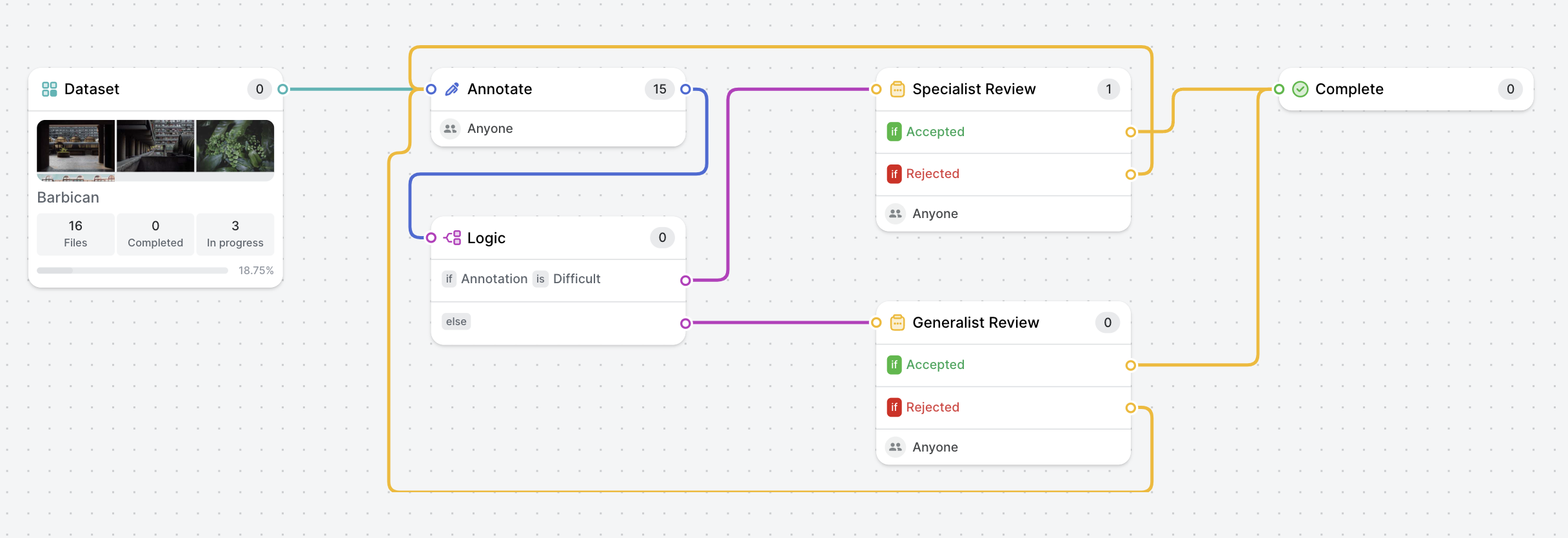

Example 1 - Specialist review routed by tags/classes

- Workers add annotations, including a "Difficult" tag for cases that require specialist review

- Files are routed to the specialist review stage if they contain the "Difficult" tag, or to the generalist review stage if they do not

- Reviewers perform a final review
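Routing on a tag comes down to a membership check. The Python sketch below only illustrates that decision: `AnnotatedFile`, `route_review`, `route_batch`, and the stage names are hypothetical stand-ins, not part of V7's SDK, and they say nothing about how V7 evaluates routing internally.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class AnnotatedFile:
    """Hypothetical record: a file name plus the tags attached during annotation."""
    name: str
    tags: Set[str] = field(default_factory=set)


def route_review(file: AnnotatedFile, specialist_tag: str = "Difficult") -> str:
    """Send tagged files to the specialist stage, everything else to the generalist stage."""
    return "specialist_review" if specialist_tag in file.tags else "generalist_review"


def route_batch(files: List[AnnotatedFile]) -> Dict[str, List[str]]:
    """Group file names by the review stage they are routed to."""
    stages: Dict[str, List[str]] = {"specialist_review": [], "generalist_review": []}
    for f in files:
        stages[route_review(f)].append(f.name)
    return stages


batch = [AnnotatedFile("scan_01.png", {"Difficult"}), AnnotatedFile("scan_02.png")]
print(route_batch(batch))
# {'specialist_review': ['scan_01.png'], 'generalist_review': ['scan_02.png']}
```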

Example 2 - Consensus review

- Multiple workers annotate the same files in parallel, blind to each other's annotations

- Files are routed to the review stage if their annotations do not agree within the IoU (intersection over union) thresholds set by the workflow creator. The reviewer then manually discards the incorrect annotations.

- Rejected files are sent to a separate annotation stage.
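To make the IoU threshold concrete, here is a simplified Python sketch. It assumes each annotator draws exactly one axis-aligned bounding box per file and that a file needs review if any pair of boxes has an IoU below the threshold. Real consensus stages also have to match up multiple instances and classes, and the `0.8` default is an arbitrary example value, not a V7 default.

```python
from itertools import combinations
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned bounding boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def needs_review(boxes_by_annotator: Dict[str, Box], threshold: float = 0.8) -> bool:
    """True if any two annotators' boxes overlap less than `threshold` (IoU)."""
    return any(iou(a, b) < threshold for a, b in combinations(boxes_by_annotator.values(), 2))


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7 (about 0.143)
print(needs_review({"alice": (0, 0, 10, 10), "bob": (1, 0, 10, 10)}))  # False: IoU is 0.9
```

Comparing every pair means a single outlying annotator is enough to send the file to review, which matches the idea of routing on disagreement.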

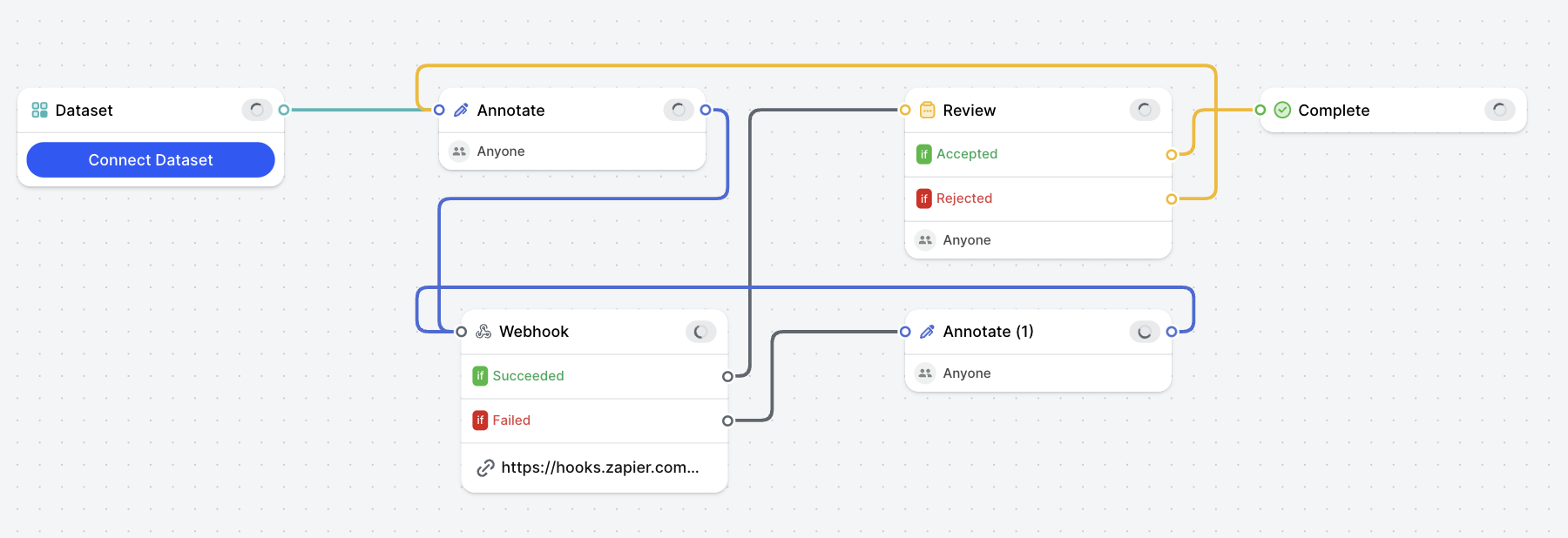

External Automation

Example 1 - Custom automation flow

- Workers add annotations

- A payload of annotation data and file metadata is sent via a webhook, triggering a custom automation (this can be a no-code solution like Zapier, or an AWS Lambda function). The automation can use V7's SDK to add a comment or annotation, or to move the file to a different stage

- Files that fail the automation's checks are sent to a separate Annotate stage, where they can be filtered and reviewed

- Reviewers perform a final review
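As one possible shape for the custom automation, the sketch below is written as an AWS Lambda handler behind an HTTP endpoint. The payload fields (`file_name`, `annotations`, `name`) and the required-class rule are hypothetical placeholders rather than V7's actual webhook schema, and the follow-up V7 SDK call is left as a comment instead of guessing its API.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical rule for this sketch: every file must contain these classes.
REQUIRED_CLASSES = {"vehicle", "number_plate"}


def check_annotations(payload: Dict[str, Any]) -> Tuple[bool, List[str]]:
    """Pass a file only if each required class has at least one annotation."""
    found = {ann.get("name") for ann in payload.get("annotations", [])}
    missing = sorted(REQUIRED_CLASSES - found)
    return (not missing, missing)


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """AWS Lambda entry point for an HTTP-triggered function (proxy-style event)."""
    payload = json.loads(event.get("body") or "{}")
    passed, missing = check_annotations(payload)
    # A real automation might now use V7's SDK to comment on the file or move it
    # to the "failed" Annotate stage; that call is intentionally not shown here.
    result = {"file": payload.get("file_name"), "passed": passed, "missing": missing}
    return {"statusCode": 200, "body": json.dumps(result)}


event = {"body": json.dumps({"file_name": "img_001.jpg", "annotations": [{"name": "vehicle"}]})}
print(lambda_handler(event, None)["body"])
# {"file": "img_001.jpg", "passed": false, "missing": ["number_plate"]}
```

Keeping the check in a plain function (`check_annotations`) separate from the handler makes it easy to reuse the same rule from a Zapier code step or a local script.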

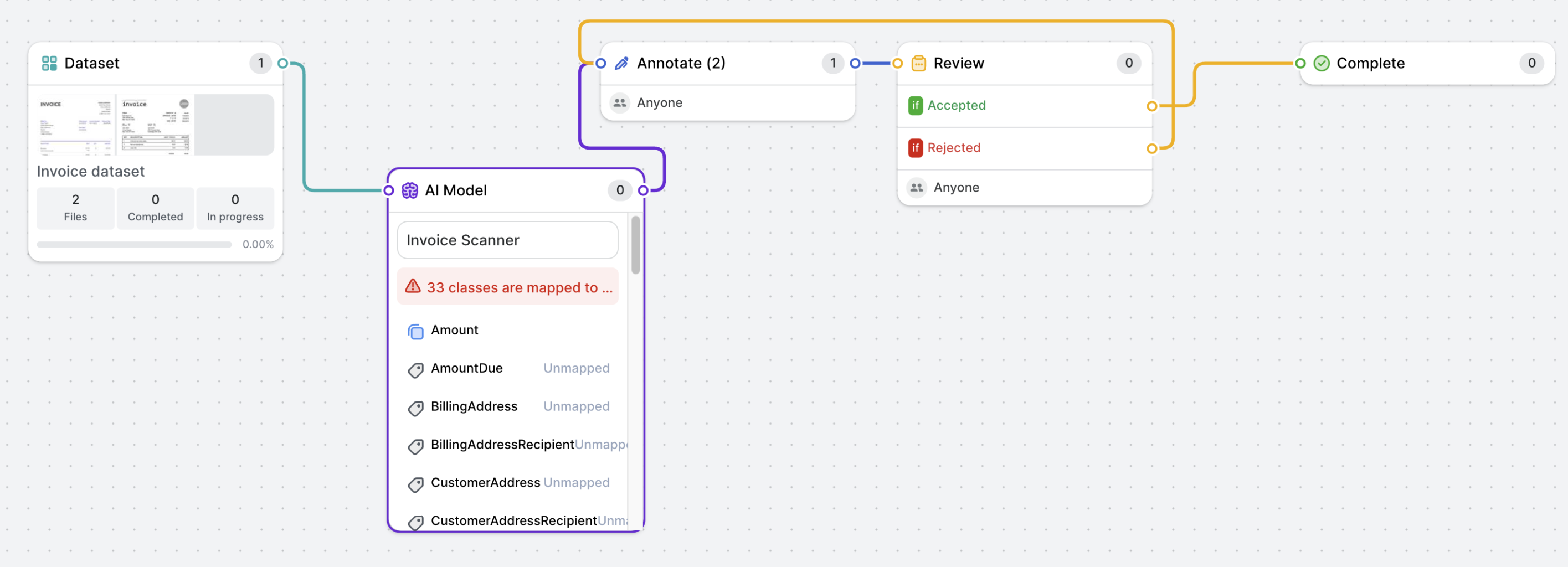

Document Processing

Example 1 - Receipt scanner flow

- Text is pulled into bounding box/polygon fields using one of the Text Scanner/Invoice Scanner/Receipt Scanner models

- Workers review model-created annotations, add missing instances, and correct model output where needed

- Reviewers perform a final review

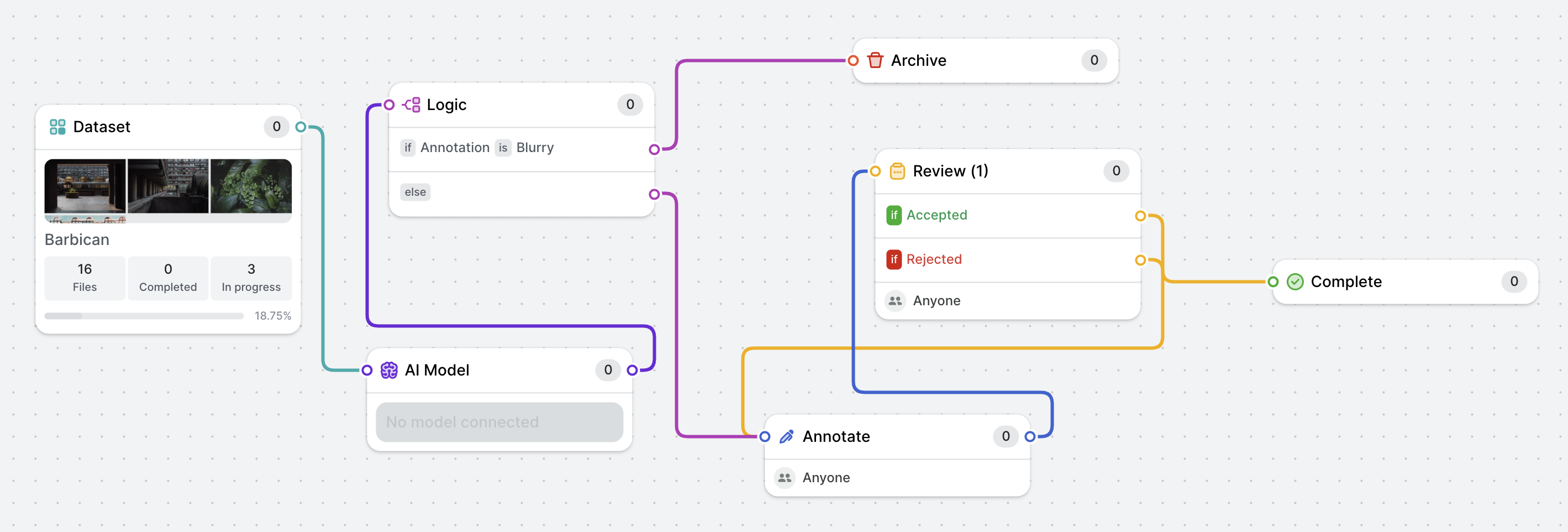

Dataset Optimisation

Example 1 - Remove corrupt data

- A "Blurry/Corrupt" tag is added to applicable files using a simple classification model

- Files containing a "Blurry/Corrupt" tag are routed to an Archive stage; all other files continue to the annotation workflow

- Workers add annotations

- Reviewers perform a final review
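The workflow above uses a classification model to apply the tag. As a lighter-weight stand-in, the sketch below swaps in a common blur heuristic, the variance of the Laplacian, where a low variance indicates few sharp edges. It works on a grayscale image given as nested lists to stay dependency-free; the `100.0` threshold, the function names, and the stage names are hypothetical and would need tuning and adapting to your own data and workflow.

```python
from statistics import pvariance
from typing import List, Set

Image = List[List[float]]  # grayscale pixel rows, all the same length
BLUR_TAG = "Blurry/Corrupt"


def laplacian_variance(img: Image) -> float:
    """Variance of a 4-neighbour Laplacian over interior pixels; low means few sharp edges."""
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, len(img) - 1)
        for x in range(1, len(img[0]) - 1)
    ]
    return pvariance(responses) if responses else 0.0


def blur_tags(img: Image, threshold: float = 100.0) -> Set[str]:
    """Return {BLUR_TAG} for empty or blurry-looking images, else an empty set."""
    if not img or not img[0]:
        return {BLUR_TAG}  # unreadable/empty data is treated as corrupt
    return {BLUR_TAG} if laplacian_variance(img) < threshold else set()


def route(tags: Set[str]) -> str:
    """Tagged files go to the Archive stage; the rest continue to annotation."""
    return "Archive" if BLUR_TAG in tags else "Annotate"


flat = [[128.0] * 8 for _ in range(8)]  # no edges at all
checker = [[0.0 if (x + y) % 2 == 0 else 255.0 for x in range(8)] for y in range(8)]
print(route(blur_tags(flat)), route(blur_tags(checker)))  # Archive Annotate
```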

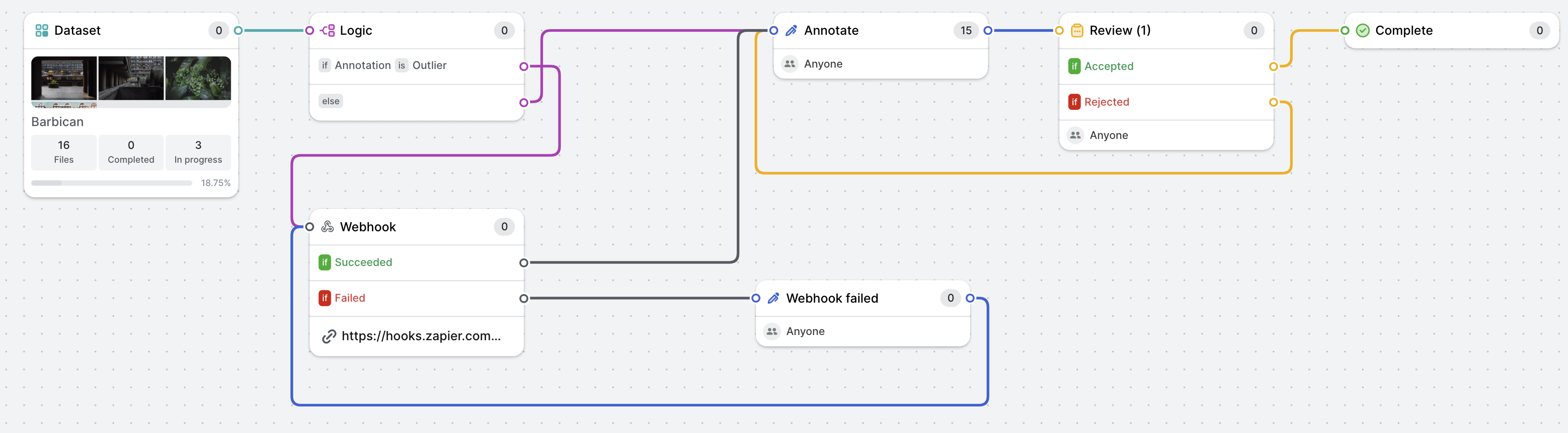

Example 2 - Identify abnormal data

- Before upload, files are run through a custom script that applies an "Outlier" tag to files that are dissimilar to the rest of the dataset.

- Files containing an "Outlier" tag are routed to a Webhook stage; all other files continue to the annotation workflow

- Metadata for "Outlier" files is sent to an external database (this can be as simple as a Google Sheet populated by a Zapier automation), so that similar files can be sourced and added to the dataset

- Workers add annotations

- Reviewers perform a final review
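The pre-upload script could work in many ways. One simple approach, sketched below in Python, assumes every file already has a numeric feature vector (for example, an image embedding computed elsewhere) and tags as outliers any file whose distance from the dataset centroid has a z-score above a threshold. The function name, the feature vectors, and the `3.0` cut-off are assumptions for illustration, not part of V7's tooling.

```python
from math import dist
from statistics import mean, pstdev
from typing import Dict, List, Set

OUTLIER_TAG = "Outlier"


def find_outliers(features: Dict[str, List[float]], z_threshold: float = 3.0) -> Set[str]:
    """Names of files whose distance from the dataset centroid has a z-score above z_threshold."""
    if not features:
        return set()
    vectors = list(features.values())
    centroid = [mean(v[i] for v in vectors) for i in range(len(vectors[0]))]
    distances = {name: dist(vec, centroid) for name, vec in features.items()}
    values = list(distances.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return set()  # every file is equally far from the centroid: nothing stands out
    return {name for name, d in distances.items() if (d - mu) / sigma > z_threshold}


# Hypothetical embeddings: 20 similar files plus one very different file.
features = {f"file_{i}.jpg": [float(i % 3), float(i % 2)] for i in range(20)}
features["odd_one.jpg"] = [100.0, 100.0]
tags = {name: {OUTLIER_TAG} for name in find_outliers(features)}
print(tags)  # {'odd_one.jpg': {'Outlier'}}
```

With a population standard deviation, no single file's z-score can exceed the square root of n - 1, so on datasets of ten files or fewer a threshold of 3.0 never fires; a smaller threshold or a different test would be needed there.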
