Darwin ✕ Detectron2

This tutorial shows how to train Detectron2 models on your Darwin datasets. If you do not have Detectron2 installed yet, please first follow these installation instructions.

Detectron2 organizes the datasets in DatasetCatalog, so the only thing we will need to do is to register our Darwin dataset in this catalog. For this, darwin-py provides the function detectron2_register_dataset, which takes the following parameters:

detectron2_register_dataset(dataset_slug [, partition, split, split_type, release_name, evaluator_type])

Input

----------

dataset_slug: Path, str

Slug of the dataset you want to register

partition: str

Selects one of the partitions [train, val, test]. If None, loads the whole dataset. (default: None)

split: str

Selects the split that defines the percentages used (use 'default' to select the default split)

split_type: str

Heuristic used to do the split [random, stratified] (default: stratified)

release_name: str

Version of the dataset. If None, takes the latest (default: None)

evaluator_type: str

Evaluator to be used in the val and test sets (default: None)

Output

----------

catalog_name: str

Name used to register this dataset partition in DatasetCatalog

Training
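As a minimal sketch of how these parameters fit together, the helper below registers several partitions in one call and collects the returned catalog names. The name `register_partitions` is hypothetical and not part of darwin-py; the registration function is passed in as an argument, so the only assumption is that it accepts `dataset_slug` and the keyword arguments listed above and returns the catalog name.

```python
from typing import Callable, Dict, Iterable


def register_partitions(
    register_fn: Callable[..., str],
    dataset_slug: str,
    partitions: Iterable[str] = ("train", "val"),
    **kwargs,
) -> Dict[str, str]:
    """Register each partition and return a {partition: catalog_name} mapping.

    `register_fn` is expected to behave like detectron2_register_dataset:
    it takes the dataset slug plus keyword arguments (partition, split_type,
    ...) and returns the name under which the partition was registered.
    This helper is illustrative and not part of darwin-py.
    """
    return {
        partition: register_fn(dataset_slug, partition=partition, **kwargs)
        for partition in partitions
    }
```

With darwin-py installed, this could be called as `register_partitions(detectron2_register_dataset, 'v7-demo/bird-species', split_type='stratified')`, and the resulting names used for `cfg.DATASETS.TRAIN` and `cfg.DATASETS.TEST`.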

Here's an example of how to use this function to register a Darwin dataset, and train an instance segmentation model on it. First, and as we did before, we will start by pulling the dataset from Darwin and splitting it into train and validation from the command line:

darwin dataset pull v7-demo/bird-species

darwin dataset split v7-demo/bird-species --val-percentage 0.1 --test-percentage 0.2

Now, in Python, we will import some Detectron2 utilities and register the Darwin dataset in Detectron2's catalog.

import os

# import some common Detectron2 and Darwin utilities

from detectron2.utils.logger import setup_logger

from detectron2 import model_zoo

from detectron2.engine import DefaultTrainer

from detectron2.config import get_cfg

from detectron2.data import MetadataCatalog, build_detection_test_loader

from detectron2.evaluation import COCOEvaluator, inference_on_dataset

from darwin.torch.utils import detectron2_register_dataset

setup_logger()

# Register both training and validation sets

dataset_id = 'v7-demo/bird-species'

dataset_train = detectron2_register_dataset(dataset_id, partition='train', split_type='stratified')

dataset_val = detectron2_register_dataset(dataset_id, partition='val', split_type='stratified')

Next, we will set up the model and the training hyper-parameters, and launch the training loop.

# Set up training configuration and train the model

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.DATASETS.TRAIN = (dataset_train,)

cfg.DATASETS.TEST = (dataset_val,)

cfg.DATALOADER.NUM_WORKERS = 2

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")

cfg.SOLVER.IMS_PER_BATCH = 8 # batch size

cfg.SOLVER.BASE_LR = 0.005 # pick a good LR

cfg.SOLVER.MAX_ITER = 5000 # and a good number of iterations

cfg.SOLVER.STEPS = (4000, 4500) # milestones where the LR is reduced

cfg.MODEL.ROI_HEADS.NUM_CLASSES = len(MetadataCatalog.get(dataset_train).thing_classes)

# Instantiate the trainer and train the model

os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

trainer = DefaultTrainer(cfg)

trainer.resume_or_load(resume=True)

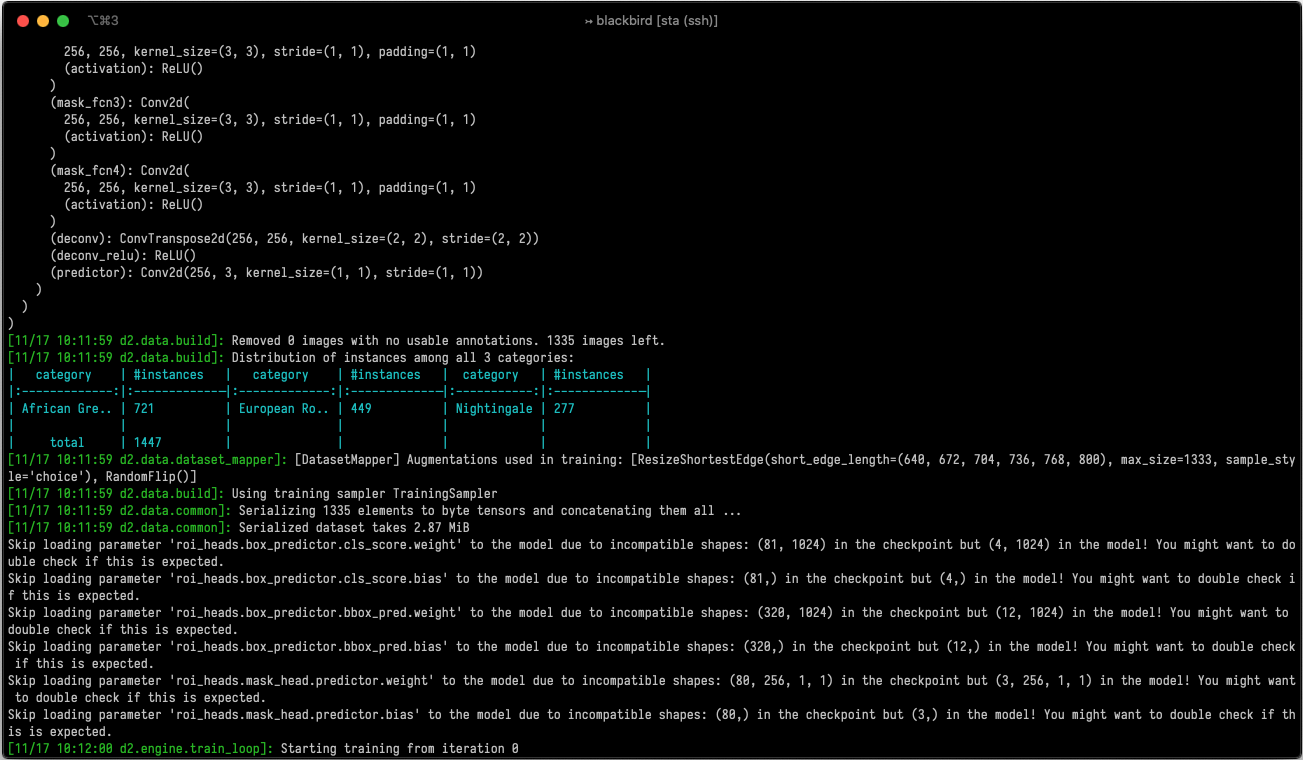

trainer.train()At this point, the training should have started and you should see this:
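To make the effect of `cfg.SOLVER.STEPS` concrete, here is a small sketch of the learning rate implied by the configuration above. It assumes Detectron2's default multi-step schedule with a decay factor (`cfg.SOLVER.GAMMA`) of 0.1 and ignores the initial warmup phase; `step_lr` is a hypothetical helper written for this illustration, not a Detectron2 function.

```python
from bisect import bisect_right


def step_lr(iteration, base_lr=0.005, steps=(4000, 4500), gamma=0.1):
    """Learning rate at `iteration` for a multi-step schedule.

    Assumptions: gamma=0.1 (Detectron2's default) and no warmup.
    The rate is multiplied by `gamma` once for every milestone
    in `steps` that has already been reached.
    """
    milestones_passed = bisect_right(steps, iteration)
    return base_lr * gamma ** milestones_passed


# Print the learning rate just before and after each milestone.
for it in (0, 3999, 4000, 4499, 4500, 4999):
    print(it, step_lr(it))
```

Under these assumptions the rate stays at the base value until iteration 4000, then drops by a factor of ten at each of the two milestones.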

Diving into Detectron2

To learn more about the different training parameters, the available models and architectures, or how to extend the trainer class to write your own customized training loops, visit Detectron2's GitHub.

Evaluation

After 5000 iterations, which should take less than 2 hours on a single GPU, the training will stop and the final weights of our model will be stored in cfg.OUTPUT_DIR. Now, we can evaluate the model's performance using the built-in COCO evaluator,

# Evaluate the model

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth")

evaluator = COCOEvaluator(dataset_val, cfg, False, output_dir="./output/")

val_loader = build_detection_test_loader(cfg, dataset_val)

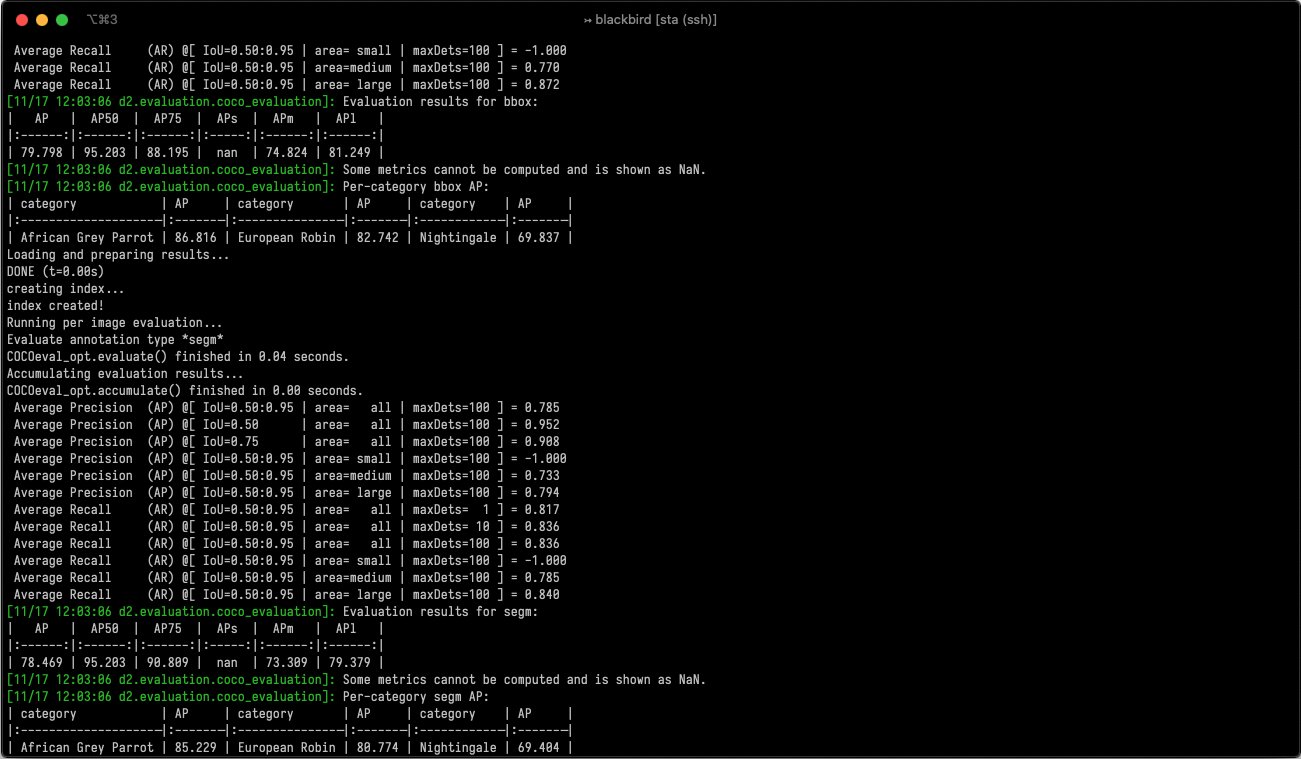

inference_on_dataset(trainer.model, val_loader, evaluator)which should return these approximate numbers:
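`inference_on_dataset` also returns the metrics as a dictionary keyed by task (for an instance segmentation model, typically `bbox` and `segm`), each mapping metric names such as `AP` and `AP50` to values. The sketch below shows one way to print a compact summary of such a dictionary. `summarize_coco_results` is a hypothetical helper, and the numbers in the example are placeholders for illustration only, not results from this tutorial.

```python
def summarize_coco_results(results, metrics=("AP", "AP50", "AP75")):
    """Return one formatted line per task (e.g. 'bbox', 'segm').

    `results` is expected to look like {"bbox": {"AP": ..., ...}, ...}.
    Metrics missing from a task are skipped.
    """
    lines = []
    for task, scores in results.items():
        parts = [f"{name}={scores[name]:.1f}" for name in metrics if name in scores]
        lines.append(f"{task}: " + ", ".join(parts))
    return lines


# Placeholder values for illustration only; NOT real evaluation results.
example = {
    "bbox": {"AP": 1.0, "AP50": 2.0, "AP75": 3.0},
    "segm": {"AP": 4.0, "AP50": 5.0},
}
print("\n".join(summarize_coco_results(example)))
```

In the tutorial's code, the same helper could be applied to the value returned by `inference_on_dataset(trainer.model, val_loader, evaluator)`.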

Inference

Last, we can use Detectron2's predictor class to run inference on images using the model that we just trained. We can do that using the following code:

import numpy as np

from PIL import Image

from detectron2.engine import DefaultPredictor

from detectron2.utils.visualizer import Visualizer

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth")

predictor = DefaultPredictor(cfg)

im = np.array(Image.open('/home/jon/.darwin/datasets/v7-demo/bird-species/images/00004505.jpg'))

outputs = predictor(im[:, :, ::-1])

v = Visualizer(im, MetadataCatalog.get(cfg.DATASETS.TRAIN[0]))

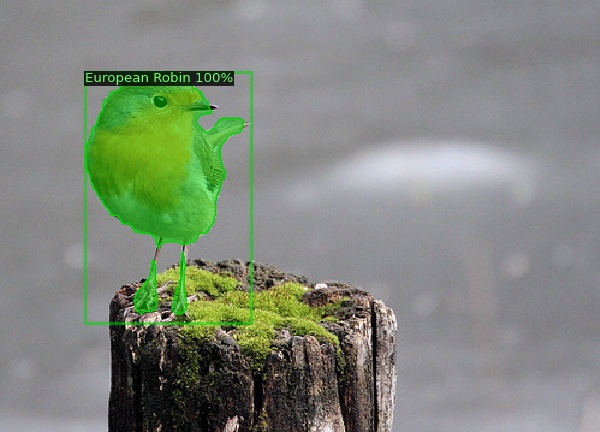

out_image = v.draw_instance_predictions(outputs["instances"].to("cpu"))If you visualize out_image you should see something like this:
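A note on `im[:, :, ::-1]` in the code above: PIL loads images in RGB channel order, while `DefaultPredictor` expects its input in the order given by `cfg.INPUT.FORMAT`, which is BGR by default. Reversing the last axis swaps the red and blue channels. The following toy example, which uses a made-up two-pixel image, illustrates the slicing on its own:

```python
import numpy as np

# A 1x2 RGB image: one pure-red pixel and one pure-blue pixel.
rgb = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# Reversing the last axis swaps the R and B channels (RGB -> BGR).
bgr = rgb[:, :, ::-1]

print(bgr[0, 0].tolist())  # the red pixel, now written in BGR order
print(bgr[0, 1].tolist())  # the blue pixel, now written in BGR order
```

The `Visualizer`, by contrast, is given the original RGB array `im`, which is why the flip is applied only to the predictor input.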
