Streaming Files From AWS S3 into Darwin

Automatically register files newly added to an S3 bucket with Darwin using an AWS Lambda function

Once you've connected an S3 bucket to your team, you may want to automatically register incoming files to the S3 bucket with a V7 dataset. This can be achieved with an AWS Lambda function that is triggered each time a file is uploaded to your S3 bucket as described below:

AWS Service Locations: For this to work, your S3 bucket and Lambda function must be in the same AWS region (e.g. eu-west-1).
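As a rough pre-flight check, the sketch below compares a bucket's region with a function's region. It assumes the bucket's `LocationConstraint` comes from S3's `GetBucketLocation` call, which reports `None` for us-east-1 and may report the legacy value `EU` for eu-west-1. The helper names are hypothetical and not part of Darwin or AWS.

```python
from typing import Optional


def normalize_bucket_region(location_constraint: Optional[str]) -> str:
    """Map an S3 LocationConstraint value to a regular region name."""
    if location_constraint is None:
        return "us-east-1"  # S3 reports no constraint for us-east-1
    if location_constraint == "EU":
        return "eu-west-1"  # legacy alias some older buckets still return
    return location_constraint


def same_region(location_constraint: Optional[str], lambda_region: str) -> bool:
    """Return True when the bucket and the function are in the same region."""
    return normalize_bucket_region(location_constraint) == lambda_region


# Possible usage with boto3 (requires AWS credentials; bucket name is a placeholder):
#   constraint = boto3.client("s3").get_bucket_location(Bucket="my-bucket")["LocationConstraint"]
#   same_region(constraint, "eu-west-1")
```

Inside a running Lambda function, the function's own region is available in the `AWS_REGION` environment variable.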

Basic Information

- 1: In AWS Lambda, select Functions > Create function, then select Use a blueprint

- 2: Under "Blueprint name", select Get S3 object python 3.7. We use Python 3.7 here because it was the last AWS Lambda runtime that bundled the requests library by default. Newer runtimes (Python 3.8+) require packaging requests manually or adding it as a Lambda layer.

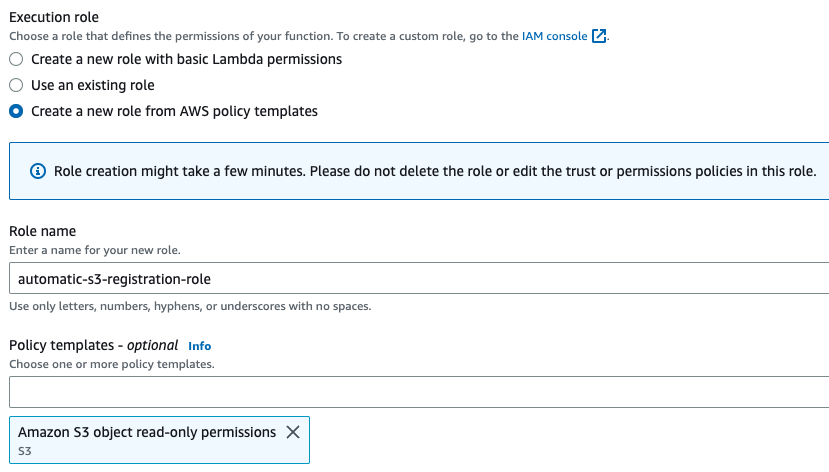

- 3: Enter your desired "Function name" and then select Create a new role from AWS policy templates

- 4: Enter your desired "Role name", then ensure the "Amazon S3 object read-only permissions" policy template is selected:

S3 Trigger

Scroll down to the "S3 trigger" section. Select Remove, and then Create Function:

In the Lambda function menu under "Function overview":

- 1: Select + Add trigger

- 2: Select S3 as the source and select the bucket files are to be registered from

- 3: Ensure "Event type" is set to "All object create events"

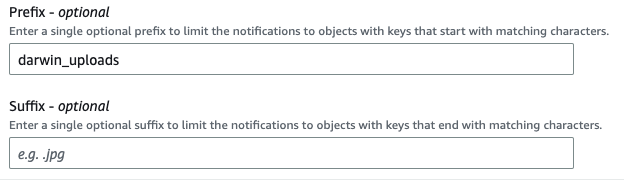

Recursive Invocation: This next step is critically important to avoid recursive invocation.

If Darwin has read & write access to your bucket, you must do the following:

- 1: Specify a Prefix that is a path to a folder that will contain your items to be registered

- 2: The location specified by this prefix must not contain the folder that Darwin-generated thumbnails are uploaded to. If it does, thumbnails will be registered, processed, and registered again in a loop.

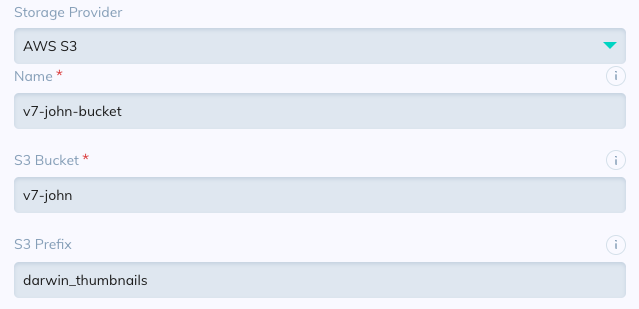

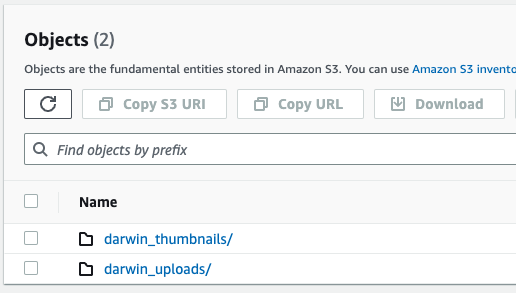

For example, see the Darwin, S3, & Lambda trigger configuration below:

In this case, recursive invocation will not occur because:

- 1: Only items uploaded to "darwin_uploads" are registered as per the "Prefix", and:

- 2: Thumbnails are uploaded to the "darwin_thumbnails" folder, which sits outside of "darwin_uploads".

Finally, check the Recursive invocation acknowledgement and select Add.
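For readers who prefer to script the trigger rather than use the console, the sketch below builds the equivalent S3 notification configuration: all object-create events, limited to the "darwin_uploads/" prefix from the example. The function name and the ARN shown in the comment are placeholders, not values from this guide.

```python
def build_notification_config(lambda_arn: str, prefix: str) -> dict:
    """Build an S3 notification configuration for one Lambda trigger."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                # Same as "All object create events" in the console
                "Events": ["s3:ObjectCreated:*"],
                # Only keys under this prefix invoke the function
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}
                },
            }
        ]
    }


# Possible usage with boto3 (bucket name and ARN are placeholders):
#   config = build_notification_config(
#       "arn:aws:lambda:eu-west-1:123456789012:function:register-in-darwin",
#       "darwin_uploads/",
#   )
#   boto3.client("s3").put_bucket_notification_configuration(
#       Bucket="my-bucket", NotificationConfiguration=config
#   )
```

Note that `put_bucket_notification_configuration` replaces the bucket's existing notification configuration, and that S3 also needs permission to invoke the function, which the console grants for you when you add the trigger there.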

Even if Darwin has read-only access to your bucket, the above is still recommended to avoid recursive invocation if the bucket permissions change at any point in the future.
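As an extra safeguard, the handler itself can refuse keys that fall outside the upload folder. The following is a minimal sketch that assumes the folder names from the example above ("darwin_uploads" and "darwin_thumbnails"); `should_register` is a hypothetical helper, not part of the code in this guide.

```python
# Folder names taken from the example configuration; adjust to your bucket layout.
UPLOAD_PREFIX = "darwin_uploads/"
THUMBNAIL_PREFIX = "darwin_thumbnails/"


def should_register(storage_key: str) -> bool:
    """Return True only for keys inside the upload folder."""
    if storage_key.startswith(THUMBNAIL_PREFIX):
        return False  # never re-register Darwin-generated thumbnails
    # The trailing slash stops look-alike folders such as "darwin_uploads_old/"
    return storage_key.startswith(UPLOAD_PREFIX)
```

Calling this at the top of the handler and returning early when it yields `False` keeps the function safe even if the trigger's prefix is later edited or removed.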

Lambda Code

In the Lambda function menu, scroll down to "Code", then under the "lambda_function" tab under "Code source" enter the following code, making sure to update the following fields:

- api_key

- team_slug

- dataset_slug

- storage_name

Then select Deploy.

Clicking Deploy will cause your trigger to go live, meaning files uploaded from this point onward will trigger the function.

```python
import json
import urllib.parse

import boto3
import requests

s3 = boto3.client('s3')


def lambda_handler(event, context):
    api_key = "ApiKey"
    team_slug = "team-slug"
    dataset_slug = "dataset-slug"
    storage_name = "storage-name-in-v7"

    # Account for certain special character encodings
    encodings = {
        "%21": '!',
        "%22": '"',
        "%24": '$',
        "%26": '&',
        "%27": "'",
        "%28": '(',
        "%29": ')',
        "%2A": '*',
        "%2C": ',',
        "%3A": ':',
        "%3B": ';',
        "%3D": '=',
        "%40": '@',
    }

    storage_key = event['Records'][0]['s3']['object']['key']
    for key in encodings:
        storage_key = storage_key.replace(key, encodings[key])
    file_name = storage_key.rsplit('/', 1)[-1]

    headers = {
        "Content-Type": "application/json",
        "Accept": "application/json",
        "Authorization": f"ApiKey {api_key}"
    }

    payload = {
        "items": [
            {
                "path": "/",
                "storage_key": f"{storage_key}",
                "name": f"{file_name}"
            }
        ],
        "dataset_slug": dataset_slug,
        "storage_slug": storage_name
    }

    response = requests.post(
        f"https://darwin.v7labs.com/api/v2/teams/{team_slug}/items/register_existing",
        json=payload,
        headers=headers,
    )
```
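To try the handler without uploading a file, you can pass it a hand-built event. The sketch below builds a minimal event containing only the fields the handler reads, plus the bucket name for context. Real S3 events carry many more fields, and `make_s3_put_event` is a hypothetical helper.

```python
from urllib.parse import quote_plus


def make_s3_put_event(bucket: str, key: str) -> dict:
    """Build a minimal S3 'object created' event for testing the handler."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket},
                    # S3 URL-encodes keys in events: spaces become "+",
                    # special characters become %XX, slashes are kept
                    "object": {"key": quote_plus(key, safe="/")},
                },
            }
        ]
    }
```

The resulting dictionary can be pasted as JSON into the Lambda console's test event editor. Keep in mind that invoking the handler with it sends a real registration request to Darwin, so use a key that exists in your bucket.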

File name issues: Certain characters in filenames can cause errors if not handled properly. Please review AWS's guidance on this under "Object key naming guidelines" in the Amazon S3 documentation.
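S3 URL-encodes object keys in the events it sends, so a space arrives as `+` and other special characters arrive as `%XX` sequences. As an alternative to listing encodings by hand, a sketch using the standard library's `urllib.parse.unquote_plus` decodes them all in one step. `decode_storage_key` is a hypothetical helper name.

```python
from urllib.parse import unquote_plus


def decode_storage_key(event: dict) -> str:
    """Return the decoded object key from the first record of an S3 event."""
    raw_key = event["Records"][0]["s3"]["object"]["key"]
    # Turns "+" into a space and every %XX sequence into its character
    return unquote_plus(raw_key, encoding="utf-8")
```

If you adopt this, check that the decoded key matches the object's name in the bucket exactly, since the storage key sent to Darwin must point at the real object.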

Please note the following about the code above:

- The filename passed to Darwin is the filename in S3. To support a different file naming convention, the code needs to be altered.

- The files will be registered in the base directory of the dataset. If you wish to register files in a specific dataset folder, you'll need to update the value of "path".

- While any individually upload-able item is supported, this trigger works by sending a registration request for each individual item. This means multiple items cannot be registered together in different slots using this code structure.
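To illustrate the first two points, the sketch below derives both the "name" and the "path" of an item from its storage key, so that files keep their sub-folder structure inside the dataset. It assumes the "darwin_uploads/" prefix from the earlier example and that Darwin accepts nested folder paths such as "/scans/2024" in "path". `build_item` is a hypothetical helper.

```python
import posixpath


def build_item(storage_key: str, upload_prefix: str = "darwin_uploads/") -> dict:
    """Build one entry for the "items" list of the registration payload."""
    # Drop the upload prefix so it does not become a dataset folder
    if storage_key.startswith(upload_prefix):
        relative = storage_key[len(upload_prefix):]
    else:
        relative = storage_key
    folder, file_name = posixpath.split(relative)
    return {
        "path": "/" + folder,        # "/" when the file sits directly under the prefix
        "storage_key": storage_key,  # always the full key in the bucket
        "name": file_name,           # change this line for a custom naming convention
    }
```

For example, the key "darwin_uploads/scans/2024/a.png" would be registered as "a.png" in the dataset folder "/scans/2024".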

Finally, the above example simply registers all uploaded files to the same dataset in Darwin. If you'd like to use the same Lambda function for multiple datasets, this is possible with some modification to the code. You simply need to match part of the storage key or filename to a dataset slug, then alter the dataset_slug field in the payload accordingly.
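One way to match on the storage key, shown here as a sketch only, is to route by the first folder under the upload prefix. The folder names, dataset slugs, fallback slug, and the `dataset_for_key` helper are all hypothetical.

```python
# Hypothetical mapping from first-level folder to dataset slug
DATASET_BY_FOLDER = {
    "ct-scans": "dataset-1",
    "x-rays": "dataset-2",
}
FALLBACK_DATASET = "fallback-dataset"


def dataset_for_key(storage_key: str, upload_prefix: str = "darwin_uploads/") -> str:
    """Pick a dataset slug from the first folder under the upload prefix."""
    if storage_key.startswith(upload_prefix):
        relative = storage_key[len(upload_prefix):]
    else:
        relative = storage_key
    # Files directly under the prefix have no folder and use the fallback
    first_folder = relative.split("/", 1)[0] if "/" in relative else ""
    return DATASET_BY_FOLDER.get(first_folder, FALLBACK_DATASET)
```

The returned value would then be used as "dataset_slug" in the payload.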

An example of how this may be achieved is displayed below, where files are registered with different datasets based on the first 2 characters of the filename. Then, "dataset_slug" is passed into the payload as above.

```python
dataset_dict = {
    "12": "dataset-1",
    "13": "dataset-2",
    "14": "dataset-3"
}

try:
    dataset_slug = dataset_dict[file_name[0:2]]
except KeyError as e:
    print("No match found, assigning to fallback dataset")
    dataset_slug = 's3-integration'
```

If you encounter any issues or have any questions, feel free to contact us at [email protected].
