The Consensus Stage

Consensus stages enable the quality assurance of annotations by measuring the extent of agreement between different annotators (and/or models) and allowing the selection of the best annotation when there is a disagreement. This page describes how to effectively use Consensus Stages in your workflows.

Overview

A Consensus Stage has three parts: the stage setup, annotation and review. This can best be understood via an example, as described below.

A medical team has 2 radiologists and a cancer segmentation model which it wants to use to annotate cancerous/non-cancerous DICOM images. At a high level:

- The workforce manager sets up a Consensus Stage and assigns the 2 radiologists and the model to parallel stages within the Consensus Stage.

- The annotators request work and independently annotate the items in the Consensus Stage, whilst the model generates its predictions.

- If there is disagreement between the annotators and/or model, the items enter a Review Stage, where the workforce manager reviews and resolves any disagreements.

How to Set Up a Consensus Stage

- Add a Consensus Stage to your workflow in the workflows editor.

- Specify which models and/or annotators will participate in the Consensus Stage. You can add multiple parallel stages, and multiple annotators per parallel stage.

Consensus - User roles

To ensure that all annotations made in a consensus stage are blind, annotators taking part in the stage should be set up with Worker-level permissions. Because they are able to see all data within a V7 team, Users and Admins will be able to see the annotations created by other users within the same stage.

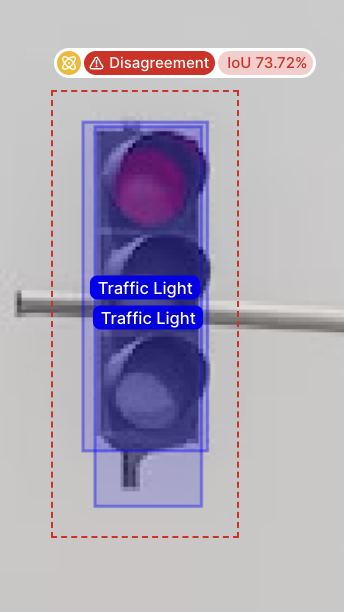

- Configure the Consensus threshold per class. This specifies the minimum IoU score required for an item to automatically pass through the Consensus Stage.

- Specify which model or annotator will act as your Champion Stage. If an item automatically passes through a Consensus Stage because the annotations agree, the Champion Stage's annotation is treated as the ‘correct’ annotation and is used moving forward.

- Save your workflow and let your annotators request work.
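The threshold and Champion Stage settings above can be illustrated with a minimal sketch for bounding boxes. It is not V7's implementation: the `Box` type, the `iou` and `resolve` helpers, and the rule that every pair of annotations must meet the threshold are assumptions made for illustration only.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Optional


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box (hypothetical type, for illustration)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp to zero so an "inverted" box (no overlap) has no area.
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two boxes, in the range [0, 1]."""
    intersection = Box(
        max(a.x_min, b.x_min),
        max(a.y_min, b.y_min),
        min(a.x_max, b.x_max),
        min(a.y_max, b.y_max),
    ).area()
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


def resolve(annotations: dict[str, Box], champion: str, threshold: float) -> Optional[Box]:
    """Return the champion's box if all pairs agree, else None (needs review).

    Assumption: agreement means every pair of annotations has IoU >= threshold.
    """
    for first, second in combinations(annotations.values(), 2):
        if iou(first, second) < threshold:
            return None
    return annotations[champion]


reads = {
    "radiologist_1": Box(0, 0, 10, 10),
    "radiologist_2": Box(0, 0, 10, 9),
    "model": Box(0, 1, 10, 10),
}
# Lowest pairwise IoU here is 0.8, so a 0.75 threshold passes automatically.
accepted = resolve(reads, champion="radiologist_1", threshold=0.75)
# A stricter 0.85 threshold sends the item to review instead.
needs_review = resolve(reads, champion="radiologist_1", threshold=0.85)
```

In this sketch, a higher threshold sends more items to the Review Stage, and the Champion Stage only matters for items that pass automatically.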

Admin/User/Workforce Manager Experience

If you are a V7 user with the Admin, User, or Workforce Manager role, you need to assign yourself to images in the Consensus Stage to be able to annotate.

If this step is not completed you will not be able to access the annotation tools to start annotating.

Self-Assignment

As an automated stage, the Consensus Stage requires files to be assigned to it in order to be triggered.

As a result, if a Consensus Stage is the first stage after the Dataset Stage in a workflow, it will be necessary for a User, Admin, or Workforce Manager to assign files to the stage manually to kick off the workflow. Workers will not be able to self-assign files until they have passed through the Consensus Stage.

How to Review Disagreements

- After all annotators and/or models have added their annotations, the disagreements can be reviewed in a Review Stage.

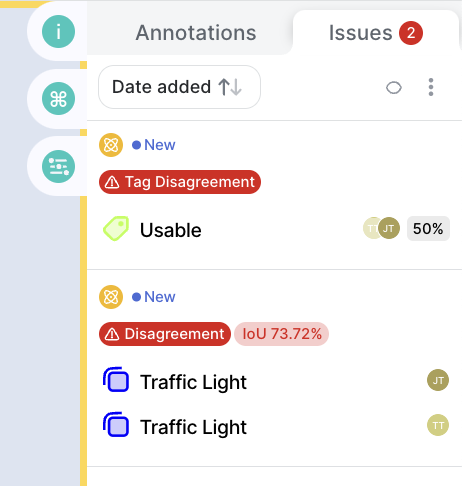

- In the annotation panel, go to the "issues" tab and view the disagreements.

- To resolve a disagreement, either accept a single annotation, or delete other annotations in the disagreement until you have the “winning” annotation left.

Parallel Blind Reads with the Consensus Stage

A common use case for the Consensus Stage is to have multiple labelers annotate the same image blindly in parallel.

In medical use cases where assessment can be subjective and one reader is not necessarily more correct than another, the Consensus Stage allows you to collect multiple readers' opinions and analyze inter-reader variability on your dataset.

To use the Consensus Stage for Parallel Blind Reads, set the disagreement threshold to 100%. This will mean all annotations pass through to the next stage.

Supported Classes

V7's consensus stage is compatible with images, videos, DICOMs and PDFs, for any number of annotators and/or models.

V7 supports automated disagreement checking for:

- Bounding Boxes (IoU)

- Polygons (IoU)

- Tags (voting)
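As a rough illustration of what voting on tags could look like, the sketch below computes the share of annotators who applied each tag and flags tags whose share falls below a threshold. The exact voting rule V7 applies is not described on this page, so the `tag_votes` and `disputed_tags` helpers and the share-based rule are assumptions.

```python
from collections import Counter


def tag_votes(tags_by_annotator: dict[str, set[str]]) -> dict[str, float]:
    """Share of annotators (0 to 1) who applied each tag."""
    total = len(tags_by_annotator)
    counts = Counter(
        tag for tags in tags_by_annotator.values() for tag in tags
    )
    return {tag: count / total for tag, count in counts.items()}


def disputed_tags(tags_by_annotator: dict[str, set[str]], threshold: float) -> list[str]:
    """Tags whose vote share is below the threshold (assumed disagreement rule)."""
    votes = tag_votes(tags_by_annotator)
    return sorted(tag for tag, share in votes.items() if share < threshold)


reads = {
    "radiologist_1": {"Dog"},
    "radiologist_2": {"Dog"},
    "model": {"Dog", "Cat"},
}
votes = tag_votes(reads)              # "Dog" from all three, "Cat" from one
disputed = disputed_tags(reads, 0.5)  # only "Cat" falls below a 50% share
```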

V7 allows you to use the consensus stage for blind parallel reads (without automated disagreement checking) for the following classes:

- Polylines

- Ellipses

- Keypoints

- Keypoint Skeletons

This means you can still do a simple automated review in the V7 Platform. If you have a consensus use case for one of these annotation types, please ask us to turn this setting on for you by contacting support or via your dedicated Customer Success Manager.

Sub-Attributes & Item Level Properties

Sub-Attributes and Item Level Properties are not supported in consensus analysis. For example, if two annotators tag an image "Dog", but one says "Golden Retriever" and the other says "Corgi", this would be considered an agreement, and the sub-attributes may be lost.
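The "Dog" example can be sketched as a comparison that looks only at the class name. The `Tag` type and both helper functions are hypothetical; they show why differing sub-attributes do not register as a disagreement, and how you might detect such cases yourself on exported annotations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tag:
    """A tag annotation with an optional sub-attribute (hypothetical type)."""
    class_name: str
    sub_attribute: Optional[str] = None


def tags_agree(a: Tag, b: Tag) -> bool:
    # Consensus-style comparison: only the class name is considered.
    return a.class_name == b.class_name


def sub_attributes_differ(a: Tag, b: Tag) -> bool:
    # Extra check for cases that count as agreement but differ in detail.
    return tags_agree(a, b) and a.sub_attribute != b.sub_attribute


first = Tag("Dog", "Golden Retriever")
second = Tag("Dog", "Corgi")
agreement = tags_agree(first, second)                  # True: both are "Dog"
hidden_conflict = sub_attributes_differ(first, second)  # True: breeds differ
```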
